A researcher from Johannes Kepler University has introduced GateLoop, a sequence model that exploits linear recurrence for efficient long-sequence modeling. It generalizes existing linear recurrent models and outperforms them in auto-regressive language modeling. GateLoop offers a low-cost recurrent mode and an efficient parallel mode, and it introduces a surrogate attention mode that has implications for Transformer architectures. It provides data-controlled relative-positional information to Attention, which points to data-controlled cumulative products, rather than the cumulative sums used in existing models, as an ingredient for more robust sequence models.

GateLoop is a versatile sequence model that extends linear recurrent models like S4, S5, LRU, and RetNet by employing data-controlled state transitions. It performs well in auto-regressive language modeling and offers both a cost-efficient recurrent mode and a highly efficient parallel mode. It also introduces a surrogate attention mode with implications for Transformer architectures. The study discusses key aspects like prefix-cumulative-product pre-computation, operator associativity, and non-data-controlled parameterization. GateLoop is empirically validated by lower perplexity scores on the WikiText-103 dataset. According to the study, existing models underutilize the potential of linear recurrence, which GateLoop addresses with data-controlled transitions and complex cumulative products.
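Operator associativity is what makes a parallel mode possible for a linear recurrence. The following is a minimal sketch, not the paper's implementation: it assumes a simplified scalar recurrence `h_n = a_n * h_{n-1} + b_n`, and the names `combine` and `scan_states` are hypothetical. Each step is treated as an affine map, and composing two such maps is associative, so all prefix states can be obtained in about log2(l) doubling rounds. The rounds run sequentially here; on parallel hardware each round could be evaluated in one step.

```python
def combine(left, right):
    """Compose two affine steps h -> a*h + b, applying `left` first, then `right`."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def scan_states(a, b):
    """Return all states h_0..h_{l-1} of h_n = a_n * h_{n-1} + b_n, with h_{-1} = 0.

    Uses a Hillis-Steele style inclusive scan: about log2(l) rounds,
    each round combining every element with the one `step` positions earlier.
    """
    elems = list(zip(a, b))
    step = 1
    while step < len(elems):
        elems = [
            combine(elems[i - step], elems[i]) if i >= step else elems[i]
            for i in range(len(elems))
        ]
        step *= 2
    # The second component of each prefix composition is the state itself.
    return [h for _, h in elems]


# Toy values chosen for illustration only (assumption, not data from the paper).
a = [2, 3, 1, 4, 2]   # state transitions
b = [1, 0, 5, 2, 3]   # inputs
states = scan_states(a, b)
print(states)  # [1, 3, 8, 34, 71]
```

The scan only relies on `combine` being associative, which is why the same idea carries over when the transitions `a_n` depend on the input.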

Sequences with long-range dependencies pose challenges in machine learning and have traditionally been tackled with Recurrent Neural Networks (RNNs). However, RNNs suffer from vanishing and exploding gradients, which hinders their stability on lengthy sequences. Gated variants like LSTM and GRU alleviate these problems but remain inefficient. Transformers introduced attention mechanisms for global dependencies, eliminating recurrence. Although they enable efficient parallel training and global pairwise dependencies, their quadratic complexity limits their use with long sequences. Linear Recurrent Models (LRMs) offer an alternative. GateLoop is presented as a foundational sequence model that generalizes LRMs through data-controlled state transitions, performs well in auto-regressive language modeling, and provides several operational modes.

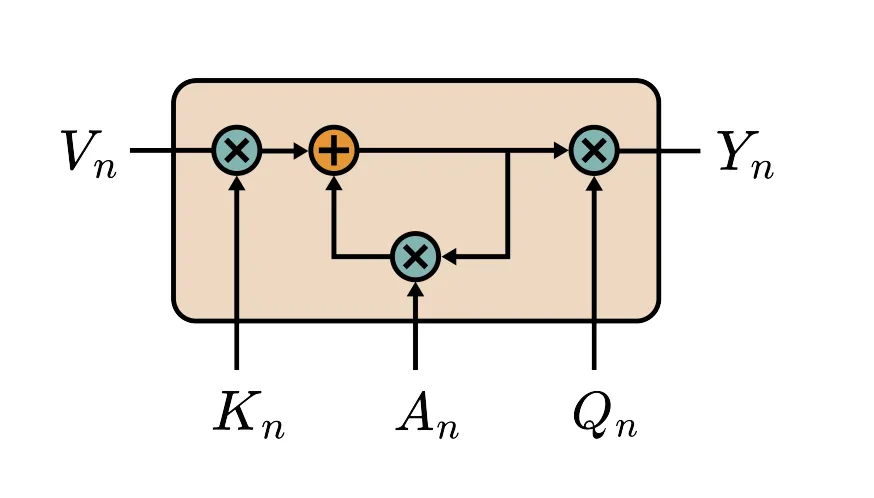

GateLoop offers an efficient O(l) recurrent mode, an optimized O(l log₂ l) parallel mode, and an O(l²) surrogate attention mode, providing data-controlled relative-positional information to Attention. Experiments on the WikiText-103 benchmark demonstrate GateLoop’s autoregressive natural language modeling prowess. A synthetic task confirms the empirical advantage of data-controlled over non-data-controlled state transitions. Key aspects include prefix-cumulative-product pre-computation and non-data-controlled parameterization to prevent variable blow-up.
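To make the relation between the recurrent mode and the surrogate attention mode concrete, here is a minimal sketch under simplifying assumptions: a single scalar channel, complex-valued transitions `a_n`, and hypothetical names `recurrent_mode` and `surrogate_attention_mode`. The recurrent form updates one state per step, while the attention-like form weights each earlier key/value pair by the cumulative product of the transitions between the two positions. The two functions should agree up to floating-point rounding.

```python
import cmath


def recurrent_mode(a, k, v, q):
    """O(l) loop: h_n = a_n * h_{n-1} + k_n * v_n, then y_n = q_n * h_n."""
    h = 0j
    ys = []
    for a_n, k_n, v_n, q_n in zip(a, k, v, q):
        h = a_n * h + k_n * v_n
        ys.append(q_n * h)
    return ys


def surrogate_attention_mode(a, k, v, q):
    """O(l^2) form: y_n = sum over m <= n of q_n * (a_{m+1} * ... * a_n) * k_m * v_m."""
    ys = []
    for n in range(len(a)):
        y = 0j
        decay = 1 + 0j  # empty product for m == n
        for m in range(n, -1, -1):
            y += q[n] * decay * k[m] * v[m]
            decay *= a[m]  # extend the product one position further back
        ys.append(y)
    return ys


# Toy inputs for illustration only (assumption, not data from the paper).
# Each transition is given by an amplitude and a phase.
a = [cmath.rect(0.9, 0.3), cmath.rect(0.5, -1.0),
     cmath.rect(0.8, 2.0), cmath.rect(0.99, 0.1)]
k = [1.0, 0.5, -2.0, 0.25]
v = [0.3, -1.2, 0.7, 2.0]
q = [1.0, -0.5, 2.0, 0.1]

y_rec = recurrent_mode(a, k, v, q)
y_att = surrogate_attention_mode(a, k, v, q)
max_gap = max(abs(r - s) for r, s in zip(y_rec, y_att))
print(max_gap)  # expected to be at floating-point rounding level
```

In this view the cumulative product of transitions plays the role of a relative-positional weight between a query position and a key position, and because the transitions depend on the data, so does that weight.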

GateLoop, a sequence model incorporating data-controlled state transitions, performs well in auto-regressive language modeling, as demonstrated in experiments on the WikiText-103 benchmark. It achieves a lower test perplexity than the other models compared, highlighting the practical benefits of data-controlled state transitions in sequence modeling. GateLoop’s capacity to forget memories input-dependently allows it to free up its hidden state for relevant information. The paper outlines directions for future research, including exploring initialization strategies, amplitude and phase activations, and the interpretability of learned state transitions for deeper model understanding.
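Input-dependent forgetting can be illustrated with a toy scalar recurrence. This is only a sketch under stated assumptions: the function `run_linear_recurrence`, the `is_reset` flags, and the hard 0/1 gate are hypothetical stand-ins, whereas a trained model would learn its transitions from the data. With a fixed transition, old content decays at the same rate regardless of the input; with a data-controlled transition, a particular input can clear the state.

```python
def run_linear_recurrence(transitions, inputs):
    """Run h_n = a_n * h_{n-1} + x_n from h = 0 and return every state."""
    h = 0.0
    states = []
    for a_n, x_n in zip(transitions, inputs):
        h = a_n * h + x_n
        states.append(h)
    return states


# Toy sequence for illustration only (assumption, not data from the paper).
inputs = [5.0, 1.0, 0.0, 2.0]
is_reset = [False, False, True, False]  # hypothetical "forget now" signal

# Non-data-controlled: the same decay at every step.
fixed_states = run_linear_recurrence([0.9] * len(inputs), inputs)

# Data-controlled: the transition is chosen per step from the input.
gated_transitions = [0.0 if reset else 1.0 for reset in is_reset]
gated_states = run_linear_recurrence(gated_transitions, inputs)

print(gated_states)  # [5.0, 6.0, 0.0, 2.0]
```

In the gated run the third step wipes the accumulated state, so the final state reflects only what arrived afterwards, while the fixed-decay run still carries a faded copy of the earliest inputs.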

GateLoop, a fully data-controlled linear RNN, extends existing linear recurrent models through data-controlled gating of inputs, outputs, and state transitions. It outperforms the other models compared in auto-regressive language modeling. GateLoop’s mechanism provides relative positional information to Attention and can be reformulated in an equivalent surrogate attention mode with O(l²) complexity. Empirical results validate the efficacy of fully data-controlled linear recurrence in autoregressive language modeling. The model can forget memories input-dependently, making room for pertinent information. Future research avenues include exploring different initialization strategies, exploring amplitude and phase activations, and enhancing the interpretability of learned state transitions.

The post Johannes Kepler University Researchers Introduce GateLoop: Advancing Sequence Modeling with Linear Recurrence and Data-Controlled State Transitions appeared first on MarkTechPost.

[Source: AI Techpark]