While AI tools are incredibly useful in a variety of industries, they truly shine when applied to solving problems in scientific and medical discovery. Researching both the world around us and the bodies we inhabit has consistently resulted in better lives for everyone, and AI tools offer a great leap forward for these efforts. Much of science is tedious, boring work that demands repetition and close attention – two areas in which AI excels.

Therefore, it makes sense that Stanford’s Institute for Human-Centered AI (HAI) introduced a new Science and Medicine chapter in its annual AI Index Report. This chapter covers the most important scientific and medical innovations in AI from the past year and discusses how these tools are being used.

To learn more about this section of the AI Index Report, we spoke with Nestor Maslej. Maslej is a research manager at Stanford HAI and was also the Editor-in-Chief of this year’s AI Index Report. His insights into the chapter – especially concerning the major medical innovations – can help explain just how important AI is to scientific discovery.

“AI is being used to solve problems that previously required a large amount of computational resources,” Maslej said. “AlphaMissense, a new model from Google, successfully classified the majority of 71 million possible missense variations. Previously, human annotators were only able to classify 0.1% of all missense mutations. There are many problems that require brute-force calculations to solve, and AI is proving to be a practical tool in solving these problems.”

AI Science and Medicine Tools

The “brute-force calculations” that Maslej mentions can be found throughout scientific and medical research. Much of science is about testing the same experiment – or similar experiments – over and over again until enough data is accumulated to reach a conclusion. Science will never escape drudgery, but AI offers a chance for researchers to leave some of the boring work to machines.

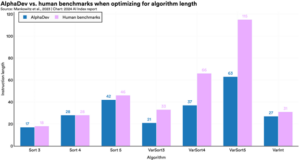

While there were many scientific AI tools to come out of the past year, the AI Index Report discussed a few particular ones in detail. AlphaDev, an AI system by Google DeepMind to make algorithmic sorting more efficient, was one of the more interesting systems to be addressed in this year’s report.

At its most basic, AlphaDev begins with a random algorithm and then tries to improve upon it by making small changes and testing the result. If a change makes the algorithm faster, AlphaDev keeps it. Google DeepMind reported that AlphaDev uncovered new sorting algorithms that led to improvements in the LLVM libc++ sorting library, which became up to 70% faster for shorter sequences.
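The change-test-keep loop described above can be illustrated with a toy sketch. This is not AlphaDev's actual method, and the names here (`apply_network`, `sorts_everything`, `improve`) are hypothetical. It assumes a tiny sorting routine written as a list of compare-and-swap steps, and it uses the number of steps as a rough stand-in for speed: a small change is kept only if the routine still sorts correctly and is shorter.

```python
import itertools
import random

def apply_network(network, values):
    """Run a list of compare-and-swap steps (i, j), with i < j, on a copy of values."""
    out = list(values)
    for i, j in network:
        if out[i] > out[j]:
            out[i], out[j] = out[j], out[i]
    return out

def sorts_everything(network, n):
    """Test the routine on every 0/1 input of length n.

    For compare-and-swap networks, passing all 0/1 inputs implies
    passing all inputs (the zero-one principle).
    """
    return all(
        apply_network(network, bits) == sorted(bits)
        for bits in itertools.product([0, 1], repeat=n)
    )

def improve(network, n, steps=200, seed=0):
    """Repeatedly try a small change (dropping one step) and keep it if still correct."""
    rng = random.Random(seed)
    best = list(network)
    for _ in range(steps):
        if not best:
            break
        k = rng.randrange(len(best))
        candidate = best[:k] + best[k + 1:]
        if sorts_everything(candidate, n):
            best = candidate  # shorter and still correct, so keep the change
    return best

# A deliberately wasteful starting routine for three inputs (illustrative only).
start = [(0, 1), (1, 2), (0, 1), (1, 2), (0, 1), (1, 2)]
shorter = improve(start, n=3)
print(len(start), "steps reduced to", len(shorter))
print(apply_network(shorter, [3, 1, 2]))
```

The real system searches over low-level instructions and measures actual running time, but the loop has the same shape: propose a change, test it, and keep it only when it helps.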

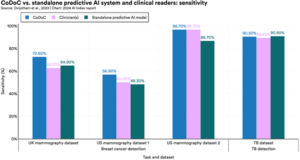

As for medical research, Maslej is interested in the Complementarity-Driven Deferral to Clinical Workflow (CoDoC) system. As AI medical imaging systems continue to improve, medical professionals must begin to think about when and where these tools are used. CoDoC is a system that identifies when clinicians should rely solely on AI for diagnosis and when they should defer to traditional clinical methods.
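The idea of deferral can be sketched in a few lines. This is a minimal illustration and not CoDoC's implementation: the `Case` fields, the `decide` function, and the fixed `low` and `high` thresholds are all assumptions made for this example, whereas a real system would learn from data when the AI can be trusted. The helper at the end computes sensitivity and specificity, the two measures used to judge such a system.

```python
from dataclasses import dataclass

@dataclass
class Case:
    ai_score: float       # hypothetical AI confidence that disease is present (0 to 1)
    clinician_says: bool  # hypothetical clinician reading of the same case

def decide(case, low=0.2, high=0.8):
    """Accept the AI's call when it is confident; otherwise defer to the clinician.

    The thresholds are illustrative placeholders, not values from CoDoC.
    Returns (decision, who_decided).
    """
    if case.ai_score >= high:
        return True, "ai"
    if case.ai_score <= low:
        return False, "ai"
    return case.clinician_says, "clinician"

def sensitivity_specificity(predictions, truths):
    """Sensitivity: share of true positives caught. Specificity: share of true negatives cleared."""
    pairs = list(zip(predictions, truths))
    true_pos = sum(1 for p, t in pairs if p and t)
    true_neg = sum(1 for p, t in pairs if not p and not t)
    positives = sum(1 for t in truths if t)
    negatives = len(truths) - positives
    return true_pos / positives, true_neg / negatives

# Usage: a confident AI score is accepted, an uncertain one is handed to the clinician.
print(decide(Case(ai_score=0.95, clinician_says=False)))
print(decide(Case(ai_score=0.50, clinician_says=True)))
```

The design point is that the combined workflow can only beat both the clinician and the standalone model if the cases sent to each are the ones that party handles best.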

“I am especially excited about tools like CoDoC, which combines the best of AI medicine with human medical expertise,” Maslej said. “CoDoC enhances both the sensitivity and specificity of evaluation across several datasets and outperforms clinician evaluations and the evaluations of a standalone AI model. I like CoDoC in particular because I believe AI should always be a tool used alongside humans. CoDoC is a great example of this kind of integration in the medical field.”

Maslej makes an incredibly valuable point about AI tools – no matter how useful they are, they’re still only tools. Humans must be actively involved in the work performed by machines, both at the research level and in how these tools are regulated and used.

Handling the Influx of this Technology

One of the major findings in this chapter of the AI Index Report is that the number of FDA-approved AI-related medical devices has increased more than 45-fold since 2012. While the potential applications of this technology clearly have value to the medical community, there is still a discussion to be had about how society balances the need for rigorous testing and validation of these devices with the desire to rapidly bring AI tools to market.

“A huge part of the integration of any technology into society is trust,” Maslej said. “If people do not trust the technology, there is likely to be less integration of it in the future. There is already evidence that people in many Western nations, especially the United States, have low levels of trust regarding AI. We need to prioritize integrating AI tools in a way that ensures they are properly vetted and tested.”

Maslej also discussed the data governance and security risks that arise from the release of sensitive patient data. Science can only advance with a large amount of information about a particular subject, but medical data in particular often contains private and potentially embarrassing information about patients. The integration of AI tools must keep this need for privacy in mind.

Additionally, there are explainability problems when it comes to AI tools and the “black box” nature of their functionality. The entire point of scientific research is to explain why something is happening, and AI is often unable to explain why it makes certain recommendations.

Maslej is also quick to point out that how these models are trained is often just as important as what they are trained to do.

“It’s important to consider the capabilities of some of these tools and understand the contexts in which they are being tested versus being deployed,” Maslej said. “Oftentimes, these tools are tested on populations that are not representative of those on which they are being deployed. This can create significant differences in performance. Transparency about testing versus deployment differences is important.”

The integration of AI tools into scientific and medical research holds immense promise, but it must be carefully managed to ensure trust, privacy, and transparency. While AI can automate particularly tedious tasks and uncover groundbreaking discoveries, it is ultimately a tool that must be wielded responsibly alongside human expertise.

[Source: EnterpriseAI]